WEEK 1

7 - 13 JUN

We had an online meeting with the mentors and decided to split the project into two main areas:

- Machine Learning algorithm using TensorFlow (developed by Tarun)

- Server work and connectivity between the code and the Aztec glyphs app hosted on the University of Oregon’s servers (by me)

We also agreed to try to have a functional prototype ready before the mid-term evaluation.

Stephanie then provided a local copy of all the glyphs hosted in the web app for a first review. I got in contact with Len, the server system administrator, to get the specifications and work out the best way to connect to the server without compromising data or files. All the project work will eventually be placed on the U. Oregon server.

The main concept for the project is:

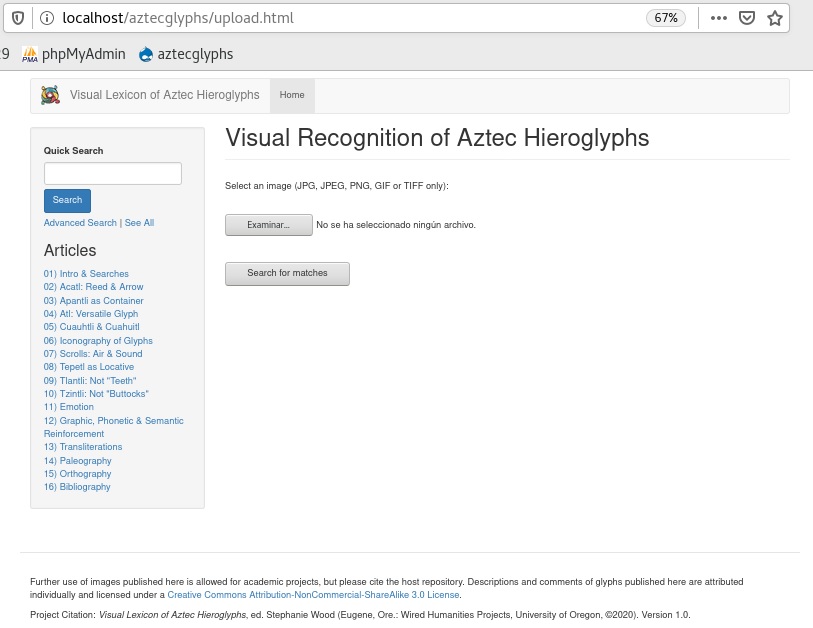

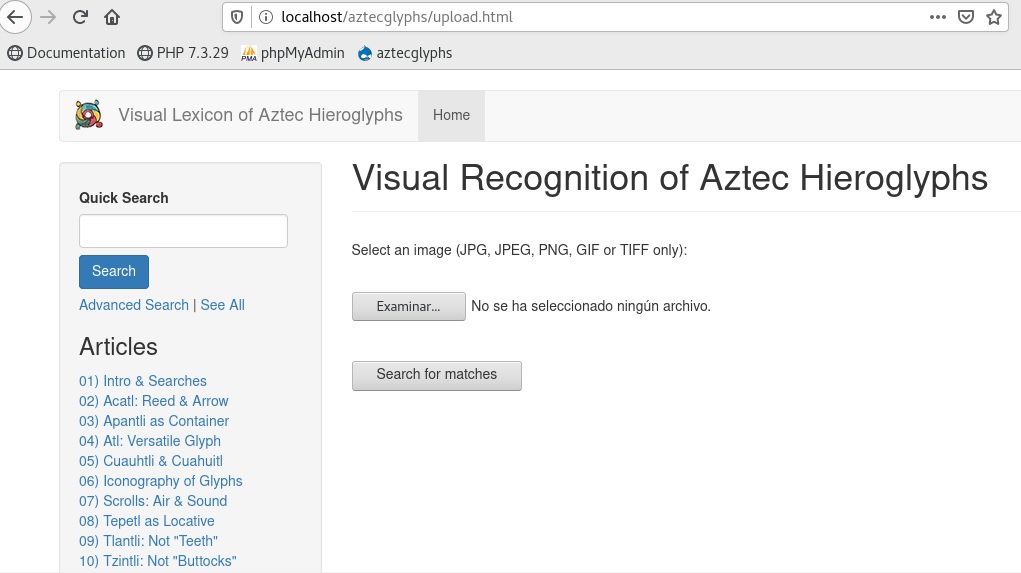

- Integrate a form or end-user webpage for uploading images.

- Provide images to the prototype via API.

- A prototype working with CPU (non-GPU).

- The prediction result comes back to the user via the webpage.

- New user images are stored in the filesystem.

WEEK 2

14 - 20 JUN

I was in contact with Len, the U. Oregon server administrator, for further specifications. We need to deploy a development environment as follows:

- Create a Virtual Machine with 2 cores and 4 GB RAM.

- Red Hat Enterprise Linux 7 or CentOS as the OS, for a non-GPU environment.

- Use no more than 40 GB of virtual disk.
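To confirm that a VM fits the limits listed above, a small check like the following could be run inside it. This is only a sketch: the function names and the 10% RAM tolerance are assumptions made for illustration, not something specified by the server administrator.

```python
import os
import shutil

# Limits taken from the list above. All names here are hypothetical.
MIN_CORES = 2
MIN_RAM_GIB = 4
MAX_DISK_GIB = 40
GIB = 1024 ** 3


def check_vm(cores, ram_gib, disk_gib, ram_tolerance=0.10):
    """Return a list of problems; an empty list means the VM fits the limits."""
    problems = []
    if cores < MIN_CORES:
        problems.append("cores: need %d, found %d" % (MIN_CORES, cores))
    # A 4 GB VM usually reports slightly less usable RAM, hence the tolerance.
    if ram_gib < MIN_RAM_GIB * (1 - ram_tolerance):
        problems.append("ram: need about %d GiB, found %.1f" % (MIN_RAM_GIB, ram_gib))
    if disk_gib > MAX_DISK_GIB:
        problems.append("disk: limit is %d GiB, found %.1f" % (MAX_DISK_GIB, disk_gib))
    return problems


def probe(path="/"):
    """Read cores, RAM and disk size of the current Linux machine."""
    cores = os.cpu_count() or 0
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    disk = shutil.disk_usage(path).total
    return cores, ram / GIB, disk / GIB
```

On the VM itself, `check_vm(*probe())` would return the list of mismatches, if any.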

Tarun provided the team with an initial Machine Learning prototype via Google Colab.

WEEK 3

21 - 27 JUN

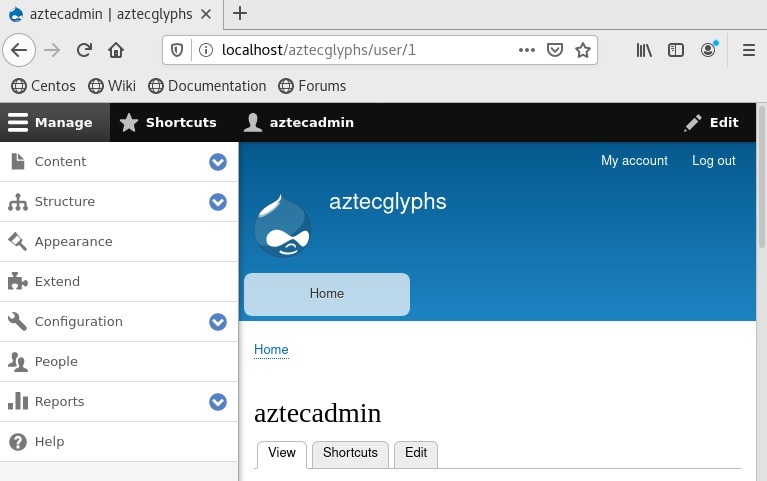

After reviewing the glyph labels and storage, I started on the following tasks:

- Create a couple of VMs, one with CentOS 7 and one with Ubuntu 20.04, just in case.

- Configure a LAMP stack (Linux-Apache-MySQL-PHP) on them.

- Install and configure Drupal 8/9 so that the image information is stored in the DB (MariaDB).

STARTING POINT:

VM: 2 cores; 4 GB RAM; 40 GB HD

Build Operating System (CentOS 7): 3.10.0-957.1.3.el7.x86_64

Root & adminuser accounts

- Pre-commands:

sudo yum update

reboot

CONFIG LAMP (as adminuser)

- MariaDB 10.5

sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY*

sudo yum -y install epel-release

sudo tee /etc/yum.repos.d/MariaDB.repo<<EOF

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/10.5/centos7-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

EOF

sudo yum makecache fast

sudo yum -y install MariaDB-server MariaDB-client

sudo systemctl start mariadb

sudo systemctl enable mariadb

sudo mysql_secure_installation

/* set root pwd & enable root authentication */

- PHP 7.3.29

sudo yum -y install httpd

sudo systemctl start httpd.service

sudo systemctl enable httpd.service

sudo firewall-cmd --permanent --zone=public --add-service=http

sudo firewall-cmd --permanent --zone=public --add-service=https

sudo firewall-cmd --reload

sudo rpm -Uvh http://rpms.remirepo.net/enterprise/remi-release-7.rpm

sudo yum -y install yum-utils

sudo yum-config-manager --enable remi-php73

sudo yum -y install php php-opcache

sudo systemctl restart httpd.service

sudo yum -y install php-mysqlnd php-pdo

sudo yum -y install php-gd php-ldap php-odbc php-pear php-xml php-xmlrpc php-mbstring php-soap curl curl-devel

sudo systemctl restart httpd.service

- PHPMyAdmin 4.4.15.10

sudo yum -y install phpMyAdmin

sudo vi /etc/httpd/conf.d/phpMyAdmin.conf

/* replace:

# Apache 2.4

# <RequireAny>

# Require ip 127.0.0.1

# Require ip ::1

# </RequireAny>

Require all granted

*/

sudo systemctl restart httpd.service

- DRUPAL 9.2.1

sudo yum install -y policycoreutils-python wget

sudo wget --content-disposition https://www.drupal.org/download-latest/tar.gz

sudo tar xf drupal-9.2.1.tar.gz -C /var/www/html/

sudo mv /var/www/html/drupal-9.2.1/ /var/www/html/aztecglyphs

sudo chown -R apache: /var/www/html/aztecglyphs/sites/default/

sudo semanage fcontext -a -t httpd_sys_rw_content_t "/var/www/html/aztecglyphs/sites/default(/.*)?"

sudo restorecon -R /var/www/html/aztecglyphs/sites/default/

sudo yum install -y php-pecl-apcu php-gd php-mbstring php-opcache php-pecl-uploadprogress php-xml

sudo yum install -y php-mysqlnd

sudo systemctl reload httpd

sudo vi /etc/httpd/conf.d/aztecglyphs.conf

/* add:

<Directory /var/www/html/aztecglyphs>

AllowOverride all

</Directory>

*/

sudo systemctl reload httpd

WEEK 4

28 JUN - 4 JUL

This week I prepared the folder structure and DB objects to mirror the U. Oregon server.

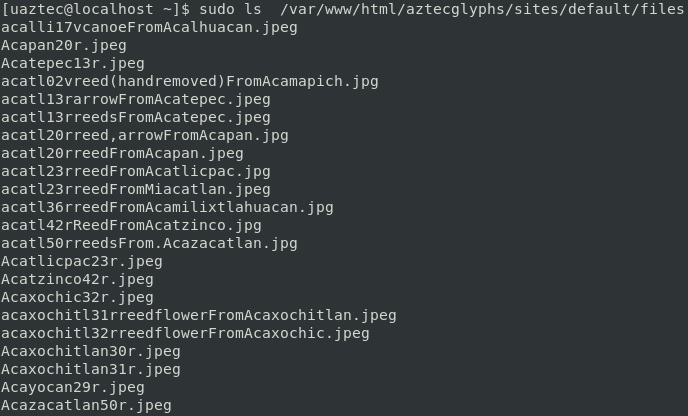

- The glyph images are hosted in /var/www/html/aztecglyphs/sites/default/files

- Stephanie provided a more accurate set of new glyphs as a ZIP.

- I created a new database named aztecglyphs.

- I created the table that stores the image information, named file_managed.

CREATE AZTECGLYPH DB

mysql -u root -p

MariaDB> create database aztecglyphs charset utf8mb4 collate utf8mb4_unicode_ci;

MariaDB> create user aztecglyphs@localhost identified by 'pwd';

MariaDB> grant all privileges on aztecglyphs.* to aztecglyphs@localhost;

MariaDB> exit

Open http://localhost/aztecglyphs

- Set “Standard” installation

- DB name: aztecglyphs

- DB user: aztecglyphs

- DB pwd: ‘pwd’

Next step:

- Set Site name: aztecglyphs

- Site email: aztecglyphs@localhost

- Admin user: aztecadmin

- Admin pwd: ‘pwd’

LOAD NEW GLYPH IMAGES IN DRUPAL FOLDER

CREATE TABLE FOR FILE METADATA

mysql -u root -p

MariaDB> use aztecglyphs

MariaDB> CREATE TABLE IF NOT EXISTS `file_managed` (

`fid` int(10) unsigned NOT NULL COMMENT 'File ID.',

`uid` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'The users.uid of the user who is associated with the file.',

`filename` varchar(255) NOT NULL DEFAULT '' COMMENT 'Name of the file with no path components. This may differ from the basename of the URI if the file is renamed to avoid overwriting an existing file.',

`uri` varchar(255) CHARACTER SET utf8 COLLATE utf8_bin NOT NULL DEFAULT '' COMMENT 'The URI to access the file (either local or remote).',

`filemime` varchar(255) NOT NULL DEFAULT '' COMMENT 'The file’s MIME type.',

`filesize` bigint(20) unsigned NOT NULL DEFAULT '0' COMMENT 'The size of the file in bytes.',

`status` tinyint(4) NOT NULL DEFAULT '0' COMMENT 'A field indicating the status of the file. Two status are defined in core: temporary (0) and permanent (1). Temporary files older than DRUPAL_MAXIMUM_TEMP_FILE_AGE will be removed during a cron run.',

`timestamp` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'UNIX timestamp for when the file was added.',

`type` varchar(50) NOT NULL DEFAULT 'undefined' COMMENT 'The type of this file.'

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COMMENT='Stores information for uploaded files.';

/* Indexes for table `file_managed` */

MariaDB> ALTER TABLE `file_managed` ADD PRIMARY KEY (`fid`), ADD UNIQUE KEY `uri` (`uri`), ADD KEY `uid` (`uid`), ADD KEY `status` (`status`), ADD KEY `timestamp` (`timestamp`), ADD KEY `file_type` (`type`);

/* AUTO_INCREMENT for table `file_managed` */

MariaDB> ALTER TABLE `file_managed` MODIFY `fid` int(10) unsigned NOT NULL AUTO_INCREMENT COMMENT 'File ID.';

MariaDB> exit
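To show what one row of file_managed would hold for a glyph image, here is a minimal sketch that builds the column values from a file on disk. The helper name is hypothetical, and the `public://` prefix assumes the file lives in Drupal's public files directory (sites/default/files); `fid` is left out because the table assigns it through AUTO_INCREMENT.

```python
import mimetypes
import os
import time


def file_managed_row(path, uid=0, scheme="public://"):
    """Build a dict whose keys mirror the file_managed columns for one file."""
    filename = os.path.basename(path)
    mime, _ = mimetypes.guess_type(filename)
    is_image = (mime or "").startswith("image/")
    return {
        "uid": uid,
        "filename": filename,
        "uri": scheme + filename,
        "filemime": mime or "application/octet-stream",
        "filesize": os.path.getsize(path),
        "status": 1,  # 1 = permanent, 0 = temporary (see the column comment)
        "timestamp": int(time.time()),
        "type": "image" if is_image else "undefined",
    }
```

The resulting dict could then be passed to a parameterized INSERT by whichever database driver is in use.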

WEEK 5

5 - 11 JUL

This week I am going to import the updated model provided by Tarun.

- Install Python 3.7 (or Miniconda3-py39) and the necessary libraries used by the ML model.

- Export the Colab notebook and import it into a Jupyter notebook.

- Import the Machine Learning model into the VM filesystem successfully.

- Test that it runs correctly.

PYTHON 3.7

sudo yum install gcc openssl-devel bzip2-devel libffi-devel zlib-devel

cd /usr/src

sudo wget https://www.python.org/ftp/python/3.7.9/Python-3.7.9.tgz

sudo tar xzf Python-3.7.9.tgz

cd Python-3.7.9

sudo ./configure --enable-optimizations

sudo make altinstall

sudo rm /usr/src/Python-3.7.9.tgz

alias python3='/usr/local/bin/python3.7'

alias pip='/usr/local/bin/pip3.7'

TENSORFLOW 2.3

cd $HOME

python3 -m ensurepip

python3 -m venv --system-site-packages ./venv

source ./venv/bin/activate

(venv)$ pip install --upgrade pip

(venv)$ pip install tensorflow==2.3.0

(venv)$ python3 -c "import tensorflow as tf;print(tf.reduce_sum(tf.random.normal([1000, 1000])))"

(venv)$ deactivate

IMPORT MOBILENET PROTOTYPE

https://colab.research.google.com/drive/1rUA51e5Wz-VxsuNOXkfwIcD8PPasXMAG?usp=sharing

WEEK 6 (First Evaluation)

12 - 18 JUL

INSTALLING KERAS 2.4 AND LIBRARIES

pip install keras==2.4

pip install pillow

pip install matplotlib

pip install opencv-python

- Create a directory for user uploads (temporarily as 777)

sudo mkdir /var/www/html/aztecglyphs/sites/default/useruploads

sudo chown apache:apache /var/www/html/aztecglyphs/sites/default/useruploads

sudo chmod 777 /var/www/html/aztecglyphs/sites/default/useruploads

- MOBILENET_AZTECGLYPHS.PY

Downloaded from Colab via “Save as .py” and placed in $HOME/Downloads (temporarily).

Adapt the code to the local filesystem:

# -*- coding: utf-8 -*-

"""MobileNet2.ipynb

Automatically generated by Colaboratory.

Original file is located at

https://colab.research.google.com/drive/1rUA51e5Wz-VxsuNOXkfwIcD8PPasXMAG

"""

# Comment googledrive (now it's local)

#from google.colab import drive

#drive.mount('/content/drive/')

# Path of the input image.

path='/var/www/html/aztecglyphs/sites/default/useruploads/example.jpg'

result_path='/var/www/html/aztecglyphs/sites/default/useruploads/result.jpg'

import os

import random

import keras

from keras.preprocessing import image

from keras.applications.imagenet_utils import decode_predictions, preprocess_input

from keras.models import Model

import numpy as np

import tensorflow as tf

base_models=tf.keras.applications.MobileNet()

# Load one image and preprocess it to the input size the model expects.

def load_image(path):

    img = image.load_img(path, target_size=base_models.input_shape[1:3])

    x = image.img_to_array(img)

    x = np.expand_dims(x, axis=0)

    x = preprocess_input(x)

    return img, x

feat_extractor = Model(inputs=base_models.input, outputs=base_models.get_layer("reshape_2").output)

# Just set this path to the path of the directory where dataset is stored.

images_path = '/var/www/html/aztecglyphs/sites/default/files'

image_extensions = ['.jpg', '.png', '.jpeg']

max_num_images = 10000

images = [os.path.join(dp, f) for dp, dn, filenames in os.walk(images_path) for f in filenames if os.path.splitext(f)[1].lower() in image_extensions]

if max_num_images < len(images):

    images = [images[i] for i in sorted(random.sample(range(len(images)), max_num_images))]

print("keeping %d images to analyze" % len(images))

import time

tic = time.clock()

# Extract one feature vector per dataset image.

features = []

for i, image_path in enumerate(images):

    if i % 500 == 0:

        toc = time.clock()

        elap = toc-tic

        print("analyzing image %d / %d. Time: %4.4f seconds." % (i, len(images),elap))

        tic = time.clock()

    img, x = load_image(image_path)

    feat = feat_extractor.predict(x)[0]

    features.append(feat)

print('finished extracting features for %d images' % len(images))

##Enter Path here

img, x = load_image(path)

imga = feat_extractor.predict(x)[0]

from scipy.spatial import distance

# Rank the dataset by cosine distance to the uploaded image.

def get_closest_images1(imga, num_results=5):

    distances = [ distance.cosine(imga, feat) for feat in features ]

    idx_closest = sorted(range(len(distances)), key=lambda k: distances[k])[1:num_results+1]

    return idx_closest

def get_concatenated_images(indexes, thumb_height):

    thumbs = []

    for idx in indexes:

        img = image.load_img(images[idx])

        img = img.resize((int(img.width * thumb_height / img.height), thumb_height))

        thumbs.append(img)

    concat_image = np.concatenate([np.asarray(t) for t in thumbs], axis=1)

    return concat_image

results_image = get_concatenated_images(get_closest_images1(imga), 200)

import matplotlib.pyplot as plt

import cv2

r=cv2.imread(path)

result=cv2.imwrite(result_path, results_image)

if result==True:

    print('File saved successfully')

else:

    print('Error in saving file')

plt.imshow(r)

plt.figure(figsize = (16,12))

plt.imshow(results_image)

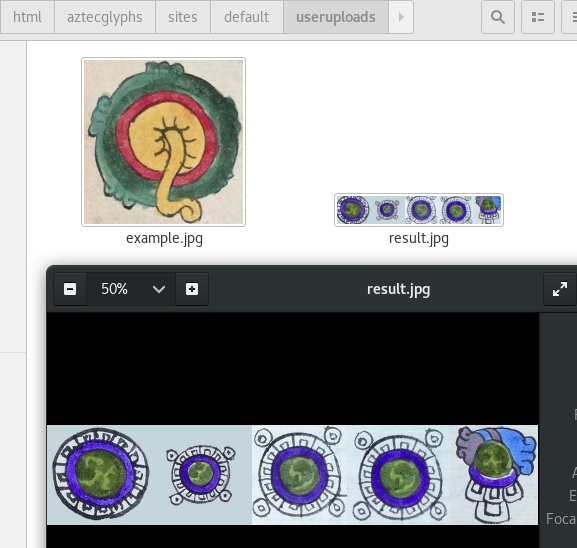
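The ranking step in the script can be illustrated without TensorFlow. The sketch below uses tiny made-up vectors instead of MobileNet features and a plain-Python cosine distance instead of scipy, so the function names and data are assumptions for illustration only. It shows why the script's slice `[1:num_results+1]` skips the first hit: when the query image is itself part of the dataset (as in the test further down, where example.jpg is a copy of a dataset file), position 0 is the query itself.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity, the same quantity scipy's distance.cosine returns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def rank_by_distance(query, features):
    """Return dataset indexes ordered from closest to farthest."""
    distances = [cosine_distance(query, f) for f in features]
    return sorted(range(len(distances)), key=lambda k: distances[k])


# Made-up 2-D "features"; the query is identical to features[0].
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.7, 0.7]]
query = [1.0, 0.0]

ranked = rank_by_distance(query, features)
print(ranked)        # full ranking, the query's own copy comes first
print(ranked[1:3])   # what a [1:n+1] slice keeps
```

For an upload that is not in the dataset, the same slice would discard the closest real match, so a `[:num_results]` slice may be the better fit in that case.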

- Copy a file to compare against and run the Python script

sudo cp /var/www/html/aztecglyphs/sites/default/files/Xico20vSIMPLEX.jpg /var/www/html/aztecglyphs/sites/default/useruploads/example.jpg

python3 $HOME/Downloads/mobilenet_aztecglyphs.py

- Console OUTPUT

[uaztec@localhost Downloads]$ python3 /home/uaztec/Downloads/mobilenet_aztecglyphs.py

2021-07-16 03:25:27.081482: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'libcudart.so.10.1'; dlerror: libcudart.so.10.1: cannot open shared object file: No such file or directory

2021-07-16 03:25:27.081504: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

Using TensorFlow backend.

2021-07-16 03:25:27.843362: W tensorflow/stream_executor/platform/default/dso_loader.cc:59] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory

2021-07-16 03:25:27.843386: W tensorflow/stream_executor/cuda/cuda_driver.cc:312] failed call to cuInit: UNKNOWN ERROR (303)

2021-07-16 03:25:27.843411: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (localhost.localdomain): /proc/driver/nvidia/version does not exist

2021-07-16 03:25:27.843571: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN)to use the following CPU instructions in performance-critical operations: AVX2

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2021-07-16 03:25:27.848319: I tensorflow/core/platform/profile_utils/cpu_utils.cc:104] CPU Frequency: 2904000000 Hz

2021-07-16 03:25:27.848468: I tensorflow/compiler/xla/service/service.cc:168] XLA service 0x4f28fd0 initialized for platform Host (this does not guarantee that XLA will be used). Devices:

2021-07-16 03:25:27.848481: I tensorflow/compiler/xla/service/service.cc:176] StreamExecutor device (0): Host, Default Version

keeping 1261 images to analyze

/home/uaztec/Downloads/mobilenet_aztecglyphs.py:44: DeprecationWarning: time.clock has been deprecated in Python 3.3 and will be removed from Python 3.8: use time.perf_counter or time.process_time instead

tic = time.clock()

/home/uaztec/Downloads/mobilenet_aztecglyphs.py:48: DeprecationWarning: time.clock has been deprecated in Python 3.3 and will be removed from Python 3.8: use time.perf_counter or time.process_time instead

toc = time.clock()

analyzing image 0 / 1261. Time: 0.0000 seconds.

/home/uaztec/Downloads/mobilenet_aztecglyphs.py:51: DeprecationWarning: time.clock has been deprecated in Python 3.3 and will be removed from Python 3.8: use time.perf_counter or time.process_time instead

tic = time.clock()

analyzing image 500 / 1261. Time: 24.9500 seconds.

analyzing image 1000 / 1261. Time: 24.8900 seconds.

finished extracting features for 1261 images

File saved successfully

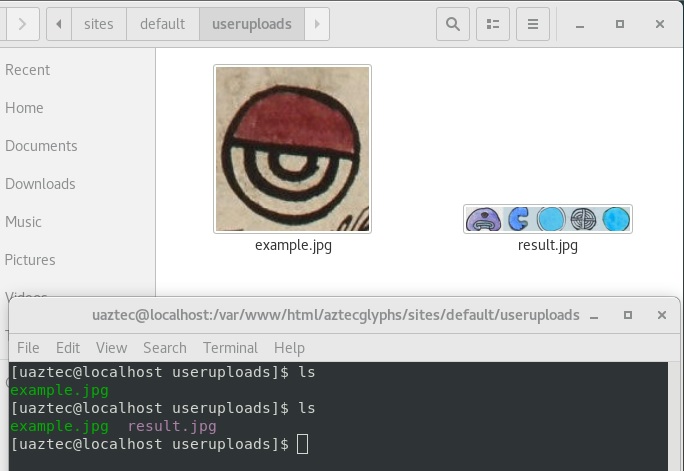

Folder OUTPUT

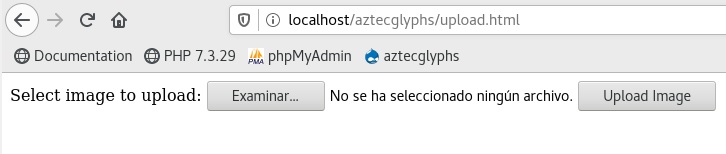

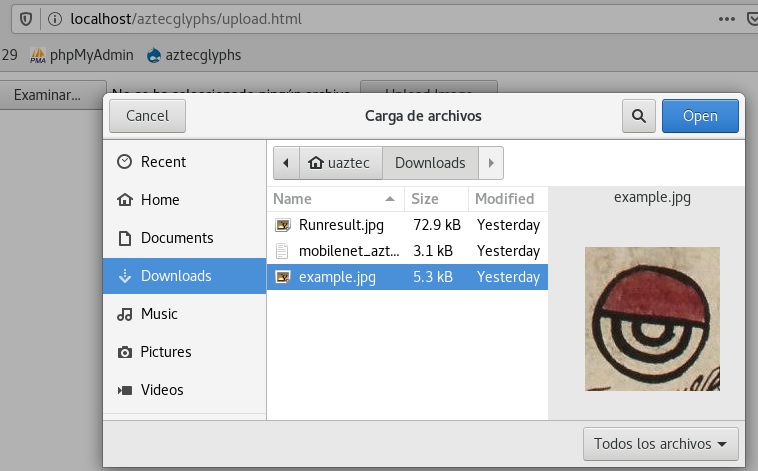

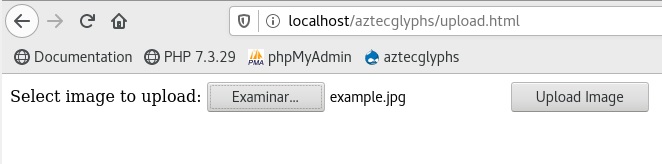

- CREATE AN UPLOAD FORM (via PHP)

sudo vi /var/www/html/aztecglyphs/upload.html

<!DOCTYPE html>

<html>

<body>

<form action="upload.php" method="post" enctype="multipart/form-data">

Select image to upload:

<input type="file" name="fileToUpload" id="fileToUpload">

<input type="submit" value="Upload Image" name="submit">

</form>

</body>

</html>

sudo vi /var/www/html/aztecglyphs/upload.php

<?php

$target_dir = "/var/www/html/aztecglyphs/sites/default/useruploads/";

$target_file = $target_dir . basename($_FILES["fileToUpload"]["name"]);

$uploadOk = 1;

$imageFileType = strtolower(pathinfo($target_file,PATHINFO_EXTENSION));

// Check whether the file is an actual image or a fake image

if(isset($_POST["submit"])) {

$check = getimagesize($_FILES["fileToUpload"]["tmp_name"]);

if($check !== false) {

echo "File is an image - " . $check["mime"] . ".";

$uploadOk = 1;

} else {

echo "File is not an image.";

$uploadOk = 0;

}

}

// Check if file already exists

if (file_exists($target_file)) {

echo "Sorry, file already exists.";

$uploadOk = 0;

}

// Check file size

if ($_FILES["fileToUpload"]["size"] > 500000) {

echo "Sorry, your file is too large.";

$uploadOk = 0;

}

// Allow certain file formats

if($imageFileType != "jpg" && $imageFileType != "png" && $imageFileType != "jpeg"

&& $imageFileType != "gif" ) {

echo "Sorry, only JPG, JPEG, PNG & GIF files are allowed.";

$uploadOk = 0;

}

// Check if $uploadOk is set to 0 by an error

if ($uploadOk == 0) {

echo "Sorry, your file was not uploaded.";

// if everything is ok, try to upload file

} else {

if (move_uploaded_file($_FILES["fileToUpload"]["tmp_name"], $target_file)) {

echo "The file ". htmlspecialchars( basename( $_FILES["fileToUpload"]["name"])). " has been uploaded.";

} else {

echo "Sorry, there was an error uploading your file.";

}

}

?>
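The same checks that upload.php performs (allowed extension, size limit, no overwrite) can be sketched in Python, which is the language the prediction script uses. This is an illustration only: `validate_upload` is a hypothetical helper, and the limits are copied from the PHP code above.

```python
import os

ALLOWED_EXTENSIONS = {"jpg", "jpeg", "png", "gif"}
MAX_BYTES = 500000  # same limit as in upload.php


def validate_upload(filename, size_bytes, target_dir):
    """Return a list of error messages; an empty list means the upload is OK."""
    errors = []
    # Keep only the last path component, as basename() does in the PHP version.
    name = os.path.basename(filename)
    ext = os.path.splitext(name)[1].lower().lstrip(".")
    if ext not in ALLOWED_EXTENSIONS:
        errors.append("only JPG, JPEG, PNG & GIF files are allowed")
    if size_bytes > MAX_BYTES:
        errors.append("file is too large")
    if os.path.exists(os.path.join(target_dir, name)):
        errors.append("file already exists")
    return errors
```

Collecting all the errors in a list, instead of echoing them one by one, makes it easier to show the user a single message.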

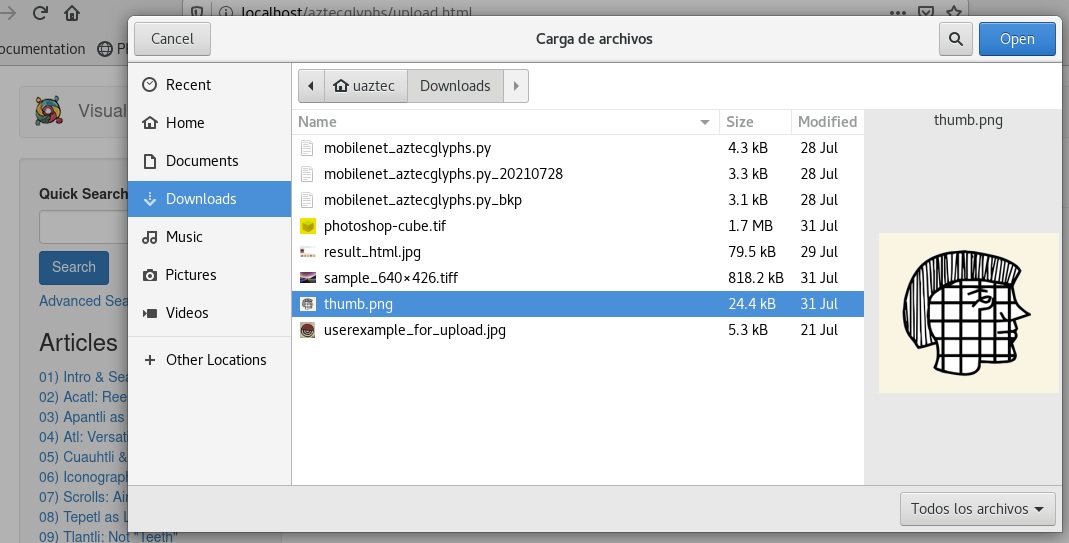

- Basic Upload Form

- Choose a file

- Select “Upload Image”

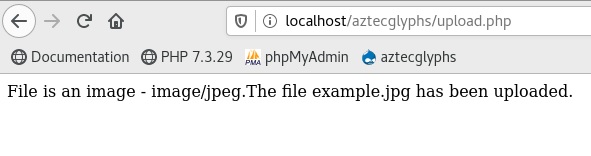

- File upload success

- Check if it was uploaded

- Now, run the Python script manually (temporarily)

python3 $HOME/Downloads/mobilenet_aztecglyphs.py

The result is written to the same folder.

- Next, change upload.html to match the Visual Lexicon site

WEEK 7

19 - 25 JUL

This week I’m going to edit the Python script so that it generates a ‘result.html’ file itself, with the glyph paths and the glyph names taken from the filenames.

- MOBILENET_AZTECGLYPHS.PY

Adapt the code so that it generates the ‘result.html’ file:

#!/usr/bin/python

# -*- coding: utf-8 -*-

"""MobileNet2.ipynb

Automatically generated by Colaboratory.

Original file is located at

https://colab.research.google.com/drive/1rUA51e5Wz-VxsuNOXkfwIcD8PPasXMAG

"""

# Path of the input image.

path='/var/www/html/aztecglyphs/sites/default/useruploads/example'

result_path='/var/www/html/aztecglyphs/sites/default/useruploads/result.jpg'

import os

import random

import keras

from keras.preprocessing import image

from keras.applications.imagenet_utils import decode_predictions, preprocess_input

from keras.models import Model

import numpy as np

import re

import tensorflow as tf

base_models=tf.keras.applications.MobileNet()

def load_image(path):

    img = image.load_img(path, target_size=base_models.input_shape[1:3])

    x = image.img_to_array(img)

    x = np.expand_dims(x, axis=0)

    x = preprocess_input(x)

    return img, x

feat_extractor = Model(inputs=base_models.input, outputs=base_models.get_layer("reshape_2").output)

# Just set this path to the path of the directory where dataset is stored.

images_path = '/var/www/html/aztecglyphs/sites/default/files'

image_extensions = ['.jpg', '.png', '.jpeg', '.gif', '.tiff']

max_num_images = 10000

images = [os.path.join(dp, f) for dp, dn, filenames in os.walk(images_path) for f in filenames if os.path.splitext(f)[1].lower() in image_extensions]

images_website = [os.path.join('/aztecglyphs/sites/default/files', f) for dp, dn, filenames in os.walk(images_path) for f in filenames if os.path.splitext(f)[1].lower() in image_extensions]

if max_num_images < len(images):

    images = [images[i] for i in sorted(random.sample(range(len(images)), max_num_images))]

print("keeping %d images to analyze" % len(images))

import time

tic = time.clock()

features = []

for i, image_path in enumerate(images):

    if i % 500 == 0:

        toc = time.clock()

        elap = toc-tic

        print("analyzing image %d / %d. Time: %4.4f seconds." % (i, len(images),elap))

        tic = time.clock()

    img, x = load_image(image_path)

    feat = feat_extractor.predict(x)[0]

    features.append(feat)

print('finished extracting features for %d images' % len(images))

##Enter Path here

img, x = load_image(path)

imga = feat_extractor.predict(x)[0]

from scipy.spatial import distance

def get_closest_images1(imga, num_results=5):

    distances = [ distance.cosine(imga, feat) for feat in features ]

    idx_closest = sorted(range(len(distances)), key=lambda k: distances[k])[1:num_results+1]

    return idx_closest

import sys

original_stdout=sys.stdout

# Creating result.html with user img first

with open('/var/www/html/aztecglyphs/result.html','w') as f:

    sys.stdout = f

    print('<!DOCTYPE html><html><head><style>')

    print('* {')

    print('box-sizing:border-box;')

    print('}')

    print('.column {')

    print(' float:left;')

    print(' width:100;')

    print(' padding:5px;')

    print('}')

    print('.row::after {')

    print(' content:"";')

    print(' clear:both;')

    print(' display:table;')

    print('}')

    print('</style></head><body>')

    print('<p>This is your uploaded image:</p>')

    print('<div class="row"><img src="/aztecglyphs/sites/default/useruploads/example" alt="" height="200"></div>')

    print('<br><p>And these are the 5 most accurate glyphs by the moment:</p>')

    print('<div class="row">')

    sys.stdout=original_stdout

# each image matched, add file url and split glyphname (first part of the filename)

def get_concatenated_images(indexes, thumb_height):

    thumbs = []

    for idx in indexes:

        with open ('/var/www/html/aztecglyphs/result.html','a') as f:

            sys.stdout=f

            print('<div class="column"><img src="',images_website[idx],'" alt="" height="120">')

            head, tail=os.path.split(images_website[idx])

            result = re.split('[0-9]|[.]', tail, maxsplit=1)

            print('<p style="text-align:center;">',result[0],'</p></div>')

            sys.stdout=original_stdout

        img = image.load_img(images[idx])

        img = img.resize((int(img.width * thumb_height / img.height), thumb_height))

        thumbs.append(img)

    concat_image = np.concatenate([np.asarray(t) for t in thumbs], axis=1)

    return concat_image

results_image = get_concatenated_images(get_closest_images1(imga), 200)

import matplotlib.pyplot as plt

import cv2

r=cv2.imread(path)

# close the html

result=cv2.imwrite(result_path, results_image)

with open('/var/www/html/aztecglyphs/result.html','a') as f:

    sys.stdout = f

    print('</div></body></html>')

    sys.stdout=original_stdout

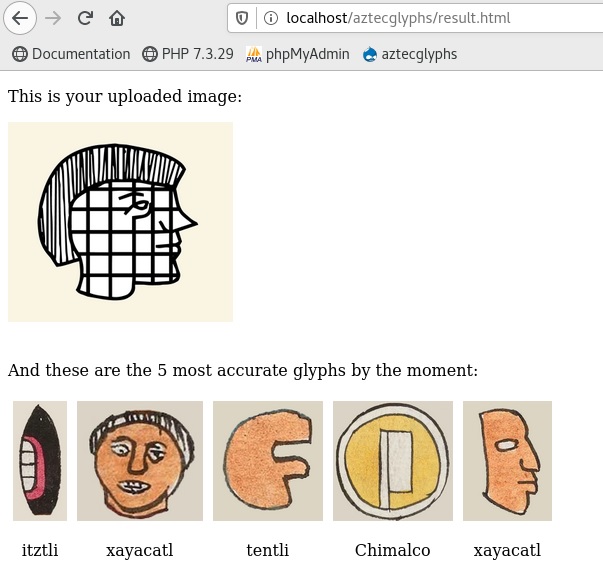

This is the result of the whole process.

This is the local result.html:

<!DOCTYPE html><html><head><style>

* {

box-sizing:border-box;

}

.column {

float:left;

width:100;

padding:5px;

}

.row::after {

content:"";

clear:both;

display:table;

}

</style></head><body>

<p>This is your uploaded image:</p>

<div class="row"><img src="/aztecglyphs/sites/default/useruploads/example" alt="" height="200"></div>

<br><p>And these are the 5 most accurate glyphs by the moment:</p>

<div class="row">

<div class="column"><img src=" /aztecglyphs/sites/default/files/itztli30robsidianknifeFromTzihuinquilocan.jpeg " alt="" height="120">

<p style="text-align:center;"> itztli </p></div>

<div class="column"><img src=" /aztecglyphs/sites/default/files/xayacatl13vfaceFromQuauhixayacatitlan.jpeg " alt="" height="120">

<p style="text-align:center;"> xayacatl </p></div>

<div class="column"><img src=" /aztecglyphs/sites/default/files/tentli51rlipFromAtenco.png " alt="" height="120">

<p style="text-align:center;"> tentli </p></div>

<div class="column"><img src=" /aztecglyphs/sites/default/files/Chimalco23r.jpeg " alt="" height="120">

<p style="text-align:center;"> Chimalco </p></div>

<div class="column"><img src=" /aztecglyphs/sites/default/files/xayacatl46rfaceSIMPLEXforXayaco.png " alt="" height="120">

<p style="text-align:center;"> xayacatl </p></div>

</div></body></html>

Success!!
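As a possible alternative to redirecting sys.stdout, the page could be assembled as a string and written in one go. The sketch below is an assumption-laden illustration, not the script used above: `glyph_name` and `render_results` are hypothetical helpers, and the CSS block is omitted for brevity. It reuses the same regular expression to take the glyph name from the filename, and formatting the URL directly into the tag avoids the stray spaces that print() with commas leaves inside the src attribute.

```python
import html
import os
import re


def glyph_name(image_url):
    """Return the part of the filename before the first digit or dot."""
    tail = os.path.basename(image_url)
    return re.split(r"[0-9]|[.]", tail, maxsplit=1)[0]


def render_results(upload_url, match_urls):
    """Build the whole result page as one string."""
    parts = [
        "<!DOCTYPE html><html><body>",
        "<p>This is your uploaded image:</p>",
        '<div class="row"><img src="%s" alt="" height="200"></div>'
        % html.escape(upload_url),
        '<div class="row">',
    ]
    for url in match_urls:
        parts.append(
            '<div class="column"><img src="%s" alt="" height="120">'
            '<p style="text-align:center;">%s</p></div>'
            % (html.escape(url), html.escape(glyph_name(url)))
        )
    parts.append("</div></body></html>")
    return "\n".join(parts)
```

The returned string could then be written with a single `open(..., 'w')` call, so a failure halfway through never leaves a half-written result.html behind.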

WEEK 8

26 JUL - 1 AUG

This week I’m going to make a fresh install of Python 3.9, use only the libraries that are needed, and adapt the code to communicate through a JS socket and run the Python MobileNet script via Flask.

sudo wget https://www.python.org/ftp/python/3.9.5/Python-3.9.5.tgz

sudo mv Python-3.9.5.tgz /usr/lib64

cd /usr/lib64

sudo tar xvf Python-3.9.5.tgz

cd Python-3.9.5

sudo ./configure --enable-optimizations

sudo make altinstall

alias python3=python3.9

cd $HOME

python3 -m ensurepip

python3 -m venv --system-site-packages ./env

source ./env/bin/activate

(env)$ pip install --upgrade pip

(env)$ pip3 install tensorflow==2.3.0

- Now I am going to create the folder structure for the API.

PATH: /var/www/html/aztecglyphs/

Rename the Python file:

./aztecglyphrecognition.py

Create the index HTML file:

./templates/aztecglyphrecognition.html

Create a blank page to return before the results are ready:

./templates/blank.html

Add a gear GIF to display while the results are being computed:

./static/gear.gif

Add a folder or soft link to the image dataset (for us: /var/www/html/aztecglyphs/sites/default/files/):

./static/samples/

Add a folder where user uploads will be stored:

./static/uploads/
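The layout above could be created by hand with mkdir and ln -s; the following sketch does the same with pathlib. `create_layout` is a hypothetical helper, and linking static/samples to the dataset (instead of copying the files) is just one of the two options mentioned above.

```python
from pathlib import Path


def create_layout(project_root, dataset_dir):
    """Create templates/ and static/uploads/, and link static/samples to the dataset."""
    root = Path(project_root)
    (root / "templates").mkdir(parents=True, exist_ok=True)
    (root / "static" / "uploads").mkdir(parents=True, exist_ok=True)
    samples = root / "static" / "samples"
    # Only create the soft link if nothing is there yet, so reruns are harmless.
    if not samples.exists() and not samples.is_symlink():
        samples.symlink_to(Path(dataset_dir).resolve(), target_is_directory=True)
    return samples
```

For this project the call would be `create_layout('/var/www/html/aztecglyphs', '/var/www/html/aztecglyphs/sites/default/files')`, run by a user with write access to the project folder.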

Now, let’s adapt our MobileNet project:

- aztecglyphrecognition.py

import os

import random

import urllib.request

from flask import Flask, flash, request, redirect, url_for, render_template, session, json, jsonify

from werkzeug.utils import secure_filename

from flask_executor import Executor

from flask_socketio import SocketIO, emit

import tensorflow.keras

from tensorflow.keras.preprocessing import image

from tensorflow.keras.applications.imagenet_utils import decode_predictions, preprocess_input

from tensorflow.keras.models import Model

import numpy as np

import tensorflow as tf

from scipy.spatial import distance

import time

ALLOWED_EXTENSIONS = set(['png', 'jpg', 'jpeg', 'gif', 'webp'])

UPLOAD_FOLDER = 'static/uploads/'

def allowed_file(filename):

    return '.' in filename and filename.rsplit('.', 1)[1].lower() in ALLOWED_EXTENSIONS

app = Flask(__name__)

app.secret_key = "secret key"

app.config['UPLOAD_FOLDER'] = UPLOAD_FOLDER

app.config['MAX_CONTENT_LENGTH'] = 16 * 1024 * 1024

app.config['SECRET_KEY'] = 'mySecretKey'

executor = Executor(app)

socketio = SocketIO(app)

base_models = tf.keras.applications.MobileNet()

features = []

images_website = []

feat_extractor = Model(inputs=base_models.input, outputs=base_models.get_layer("reshape_2").output)

@app.route('/')

def index():

    return home()

# Save each uploaded file and queue it for analysis in the background.

@app.route('/', methods=['POST'])

def upload_image():

    clientId = request.form.get('client_id')

    files = request.files.getlist("file")

    meta = json.loads(request.form.get('meta'))

    for file in files:

        imageId = meta[file.filename]

        filename = secure_filename(file.filename)

        imagePath = os.path.join(app.config['UPLOAD_FOLDER'], filename)

        file.save(imagePath)

        executor.submit(analyze_image, imagePath, file.filename, clientId, imageId)

    return render_template('blank.html')

def home():

    return render_template('aztecglyphrecognition.html')

# Extract one feature vector per dataset image; runs once at startup.

def extract_features():

    images_path = 'static/samples/'

    image_extensions = ['.jpg', '.png', '.jpeg', '.gif', '.webp']

    max_num_images = 10000

    images = [os.path.join(dp, f) for dp, dn, filenames in os.walk(images_path) for f in filenames if os.path.splitext(f)[1].lower() in image_extensions]

    images_website.clear()

    images_website.extend([os.path.join(images_path, f) for dp, dn, filenames in os.walk(images_path) for f in filenames if os.path.splitext(f)[1].lower() in image_extensions])

    if max_num_images < len(images):

        images = [images[i] for i in sorted(random.sample(range(len(images)), max_num_images))]

    print("keeping %d images to analyze" % len(images))

    tic = time.time()

    features.clear()

    for i, image_path in enumerate(images):

        if i % 500 == 0:

            toc = time.time()

            elap = toc-tic

            print("analyzing image %d / %d. Time: %4.4f seconds." % (i, len(images),elap))

            tic = time.time()

        img, x = load_image(image_path)

        feat = feat_extractor.predict(x)[0]

        features.append(feat)

    print('finished extracting features for %d images' % len(images))

# Find the closest glyphs for one upload and push them to the client's socket.

def analyze_image(imagePath, originalFileName, clientId, imageId):

    print("analyzing image: %s!" % (imagePath))

    img, x = load_image(imagePath)

    imga = feat_extractor.predict(x)[0]

    results = get_closest_images(imga, 5)

    payload = dict()

    payload['results'] = []

    payload['image_id'] = imageId

    for idx in results:

        payload['results'].append(images_website[idx])

    socketio.emit(clientId, json.dumps(payload))

def load_image(path):

    img = image.load_img(path, target_size=base_models.input_shape[1:3])

    x = image.img_to_array(img)

    x = np.expand_dims(x, axis=0)

    x = preprocess_input(x)

    return img, x

def get_closest_images(imga, num_results=5):

    distances = [ distance.cosine(imga, feat) for feat in features ]

    idx_closest = sorted(range(len(distances)), key=lambda k: distances[k])[1:num_results+1]

    return idx_closest

extract_features()
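The message that analyze_image emits has two fields the browser code relies on: image_id (which result container to fill) and results (the image URLs to show). A minimal sketch of that contract as a standalone function follows; `build_payload` is a hypothetical name and is not part of the app above.

```python
import json


def build_payload(image_id, ranked_indexes, images_website):
    """Serialize the match list for one uploaded image as a JSON string."""
    results = [images_website[idx] for idx in ranked_indexes]
    return json.dumps({"image_id": image_id, "results": results})
```

Keeping the payload in one small function would make it easy to check the message format without loading TensorFlow or starting the socket server.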

WEEK 9

2 - 8 AUG

This week I am going to finish the project by adding the upload-socket form and testing it.

- Create ./templates/blank.html

<!DOCTYPE html>

<html>

<body>

</body>

</html>

- Create ./templates/aztecglyphrecognition.html

The CSS could be stored in a separate file, but I’m going to add it in the head of the document.

<!DOCTYPE html>

<html>

<head>

<title>Visual Recognition of Aztec Hieroglyphs</title>

<!-- <link rel="stylesheet" href="static/style.css" type="text/css"> -->

<style type="text/css">

body {

background-color: #fefefe;

font-family: Arial, Helvetica, sans-serif;

padding: 20px;

font-size: 14px;

}

label {

display: inline-block;

background-color: royalblue;

color: white;

padding: 0.5rem;

font-family: sans-serif;

border-radius: 0.3rem;

cursor: pointer;

margin-top: 1rem;

-webkit-touch-callout: none;

-webkit-user-select: none;

-khtml-user-select: none;

-moz-user-select: none;

-ms-user-select: none;

user-select: none;

}

.clearButton {

background-color: royalblue;

border: none;

color: white;

padding: 8px;

text-align: center;

text-decoration: none;

float: right;

clear: both;

font-size: 14px;

border-radius: 0.3rem;

margin-top: -40px;

}

.collectionContainer {

background-color: white;

margin-bottom: 20px;

border: 1px solid #ddd;

border-radius: 5px;

padding: 10px;

-webkit-box-shadow: 1px 1px 5px 2px rgba(0, 0, 0, 0.15);

box-shadow: 1px 1px 5px 2px rgba(0, 0, 0, 0.15);

}

.collectionContainer img {

border-radius: 8px;

object-fit: cover;

margin-right: 10px;

margin-bottom: 10px

}

.fileName {

font-size: 11px;

color: #555;

padding-top: 8px;

padding-bottom: 16px;

}

.arrow {

font-size: 60px;

vertical-align: bottom;

margin-top: 28px;

padding-left: 20px;

padding-right: 20px;

display: inline-block;

color: #666;

}

.gears {

vertical-align: bottom;

margin-top: 42px;

padding-left: 20px;

}

.noSelect {

-webkit-touch-callout: none;

-webkit-user-select: none;

-khtml-user-select: none;

-moz-user-select: none;

-ms-user-select: none;

user-select: none;

}

</style>

</head>

<body>

<h1>Visual Recognition of Aztec Hieroglyphs</h1>

<form method="post" action="/" id="form" enctype="multipart/form-data">

<input type="file" name="file" id="file" onchange="submitForm(this)" autocomplete="off" accept="image/gif, image/jpeg, image/png, image/webp" required hidden multiple>

<input type="hidden" name="client_id" id="client_id">

<input type="hidden" name="meta" id="meta">

<label for="file" class="noSelect">Select images to analyze</label><i> (JPG, JPEG, PNG, GIF or WEBP only)</i>

</form>

<br><br>

<button class="clearButton noSelect" id="clearButton" style="cursor:pointer">Clear results</button>

<div id="results"></div>

<div id="noResults">Click the button above to analyze the 5 most accurate glyphs per image</div>

<script src="https://code.jquery.com/jquery-3.5.1.min.js" integrity="sha256-9/aliU8dGd2tb6OSsuzixeV4y/faTqgFtohetphbbj0=" crossorigin="anonymous"></script>

<script type="text/javascript" src="https://cdnjs.cloudflare.com/ajax/libs/socket.io/4.1.3/socket.io.js"></script>

<script type="text/javascript" charset="utf-8">

function uuid() {

return 'xxxxxxxx-xxxx-4xxx-yxxx-xxxxxxxxxxxx'.replace(/[xy]/g, function (c) {

var r = Math.random() * 16 | 0, v = c == 'x' ? r : (r & 0x3 | 0x8);

return v.toString(16);

})

}

if (localStorage.getItem('client_id') === null) {

localStorage.setItem('client_id', uuid())

}

clientId = localStorage.getItem('client_id')

var socket = io.connect('http://127.0.0.1:5000');

socket.on(clientId, function (data) {

var payload = JSON.parse(data)

$('#' + payload['image_id']).html('')

for (var i = 0; i < payload['results'].length; i++) {

var img = $(document.createElement('img'))

img.attr('src', payload['results'][i])

img.attr('width', '120')

img.attr('height', 'auto')

img.attr('style', 'border: 1px solid #ddd')

$('#' + payload['image_id']).append(img)

}

})

function clear() {

$('#clearButton').hide()

$('#results').html(' ')

$('#noResults').show()

}

function submitForm(form) {

$('#noResults').hide()

$('#clearButton').show()

var meta = {}

var files = document.getElementById('file').files

for (var i = 0; i < files.length; i++) {

console.log('file name => ' + files[i].name);

var imageId = uuid()

meta[files[i].name] = imageId

var container = $(document.createElement('div'))

container.attr('class', 'collectionContainer')

var fileName = $(document.createElement('div')).text(files[i].name)

fileName.attr('class', 'fileName')

container.append(fileName)

var sourceImage = $(document.createElement('img'))

sourceImage.attr('src', URL.createObjectURL(files[i]))

sourceImage.attr('width', '120')

sourceImage.attr('height', 'auto')

sourceImage.attr('style', 'border: 1px solid #ddd')

container.append(sourceImage)

var arrow = $(document.createElement('span')).html("➩")

arrow.attr('class', 'arrow')

container.append(arrow)

var resultsContainer = $(document.createElement('span'))

resultsContainer.attr('id', imageId)

container.append(resultsContainer)

var loading = $(document.createElement('img'))

loading.attr('src', 'static/gear.gif')

loading.attr('class', 'gears')

loading.attr('width', '60')

loading.attr('height', 'auto')

resultsContainer.append(loading)

$('#results').prepend(container)

}

document.getElementById('meta').value = JSON.stringify(meta)

document.getElementById('client_id').value = localStorage.getItem('client_id')

var form = $('#form')[0]

var data = new FormData(form)

form.reset()

$.ajax({

type: "POST",

enctype: 'multipart/form-data',

url: "/",

data: data,

processData: false,

contentType: false,

cache: false,

timeout: 600000,

success: function (data) {

console.log("SUCCESS : ")

},

error: function (e) {

console.log("ERROR : ", e)

}

});

return true

}

$(document).ready(function () {

$('#clearButton').hide()

$('#clearButton').on("click", function (e) {

e.preventDefault();

e.stopPropagation();

clear()

});

})

</script>

</body>

</html>

- Upload a ./static/gear.gif

Now, I’m going to test it:

- Go to the project folder (/var/www/html/aztecglyphs) and create a virtual environment

python3 -m venv env

- Activate the environment

source env/bin/activate

- Install dependencies

pip3 install flask flask-executor Werkzeug flask-socketio keras==2.4 pillow

- Export app name and environment for Flask

export FLASK_APP=aztecglyphrecognition

export FLASK_ENV=production

- Run the project

python3 -m flask run

- Wait and open the website in a browser on port 5000:

http://127.0.0.1:5000/

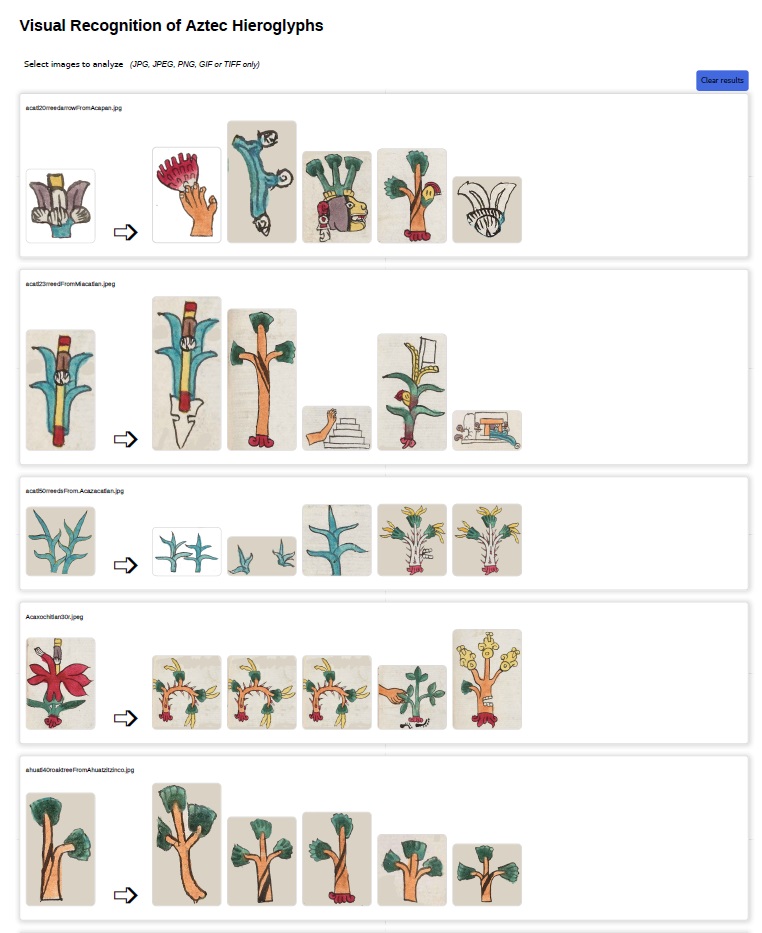

These are the results:

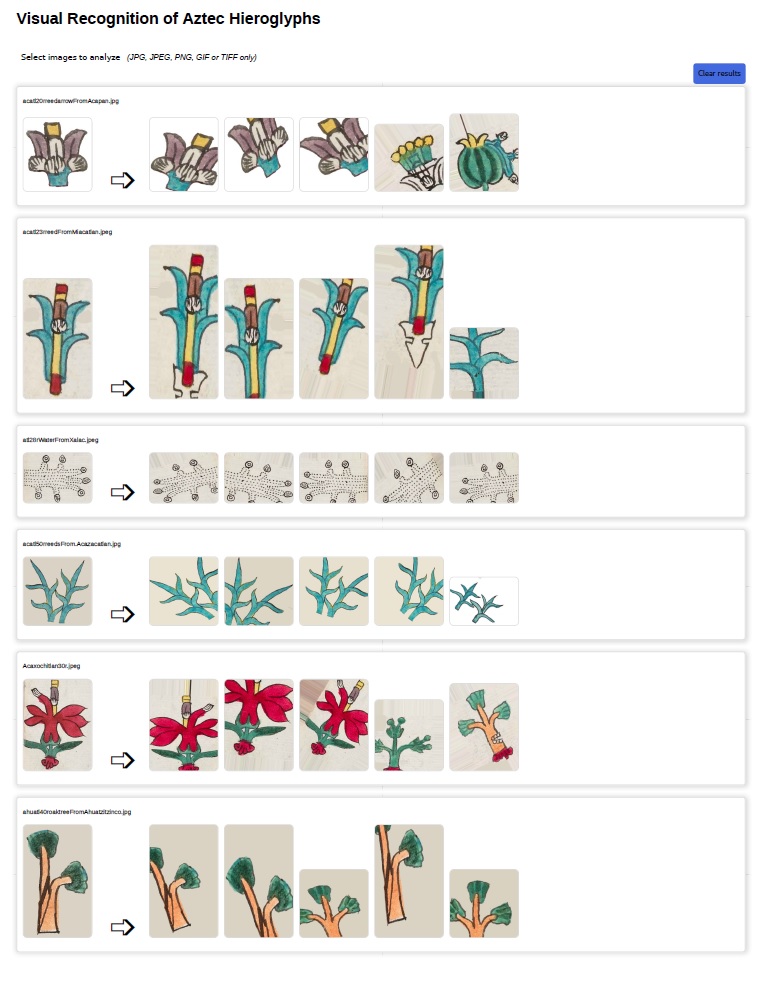

And these are the results using rotated images provided by Tarun

SUCCESS!!!

WEEK 10

9 - 15 AUG

This is the last week. I’m in contact with Stephanie and Tarun to show them my results.

I am also writing the documentation and final reports.